Client Stories – QumulusAI

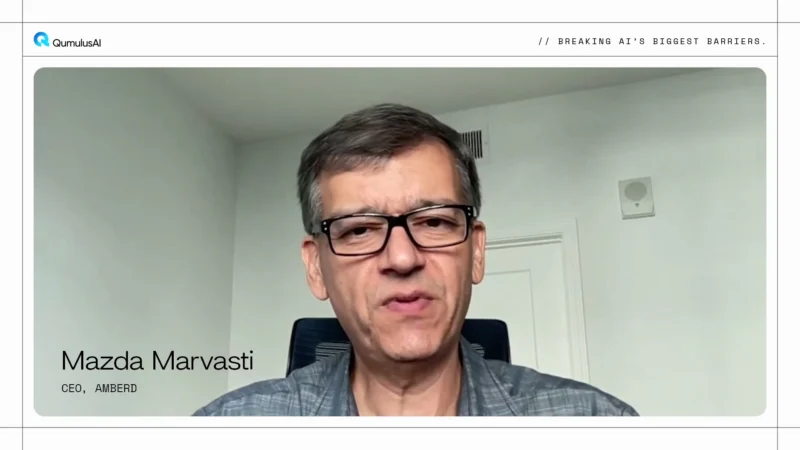

Many organizations struggle to deliver real-time business insights to executives. Traditional workflows require analysts and database teams to extract, prepare, and validate data before it reaches decision makers. That process can stretch across departments and delay critical answers. Mazda Marvasti, CEO of Amberd, says the cycle to answer a single business question can take…

Latest

QumulusAI Brings Fixed Monthly Pricing to Unpredictable AI Costs in Private LLM Deployment

Unpredictable AI costs have become a growing concern for organizations running private LLM platforms. Usage-based pricing models can drive significant swings in monthly expenses as adoption increases. Budgeting becomes difficult when infrastructure spending rises with every new user interaction. Mazda Marvasti, CEO of Amberd, says pricing volatility created challenges as his team expanded its…

Amberd Moves to the Front of the Line With QumulusAI’s GPU Infrastructure

Reliable GPU infrastructure determines how quickly AI companies can execute. Teams developing private LLM platforms depend on consistent high-performance compute. Shared cloud environments often create delays when demand exceeds available capacity. Mazda Marvasti, CEO of Amberd, says waiting for GPU capacity did not align with his company’s pace. Amberd required guaranteed availability to support…

QumulusAI Secures Priority GPU Infrastructure Amid AWS Capacity Constraints on Private LLM Development

Developing a private large language model (LLM) on AWS can expose infrastructure constraints, particularly around GPU access. For smaller companies, securing consistent access to high-performance computing often proves difficult when competing with larger cloud customers. Mazda Marvasti, CEO of Amberd AI, encountered these challenges while scaling his company’s AI platform. Because Amberd operates its own…