Custom AI Chips Signal Segmentation for AI Teams, While NVIDIA Sets the Performance Ceiling for Cutting-Edge AI

Microsoft’s introduction of the Maia 200 adds to a growing list of hyperscaler-developed processors, alongside offerings from AWS and Google. These custom AI chips are largely designed to improve inference efficiency and optimize internal cost structures, though some platforms also support large-scale training. Google’s offering is currently the most mature, with a longer production history and broader training capabilities.

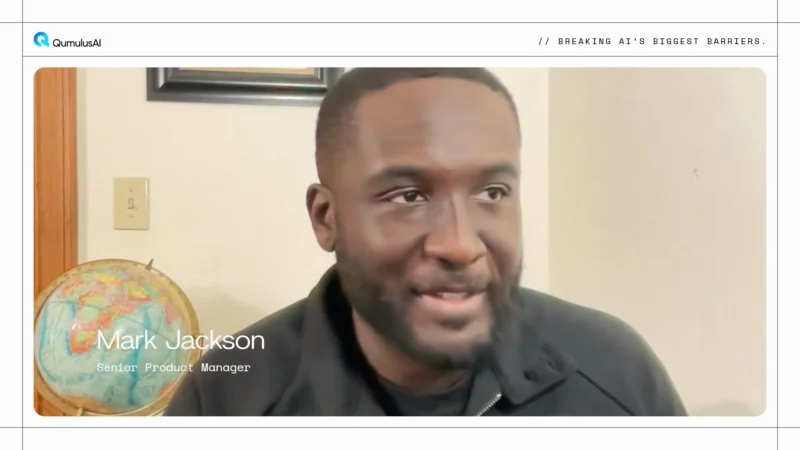

Mark Jackson, Senior Product Manager at QumulusAI, says this shift signals segmentation rather than disruption for AI development teams. He explains that hyperscaler silicon is often optimized for specific workload patterns within a single cloud environment. Jackson notes that NVIDIA GPUs remain the default for frontier training and for projects that require cross-cloud flexibility. He adds that NVIDIA's ecosystem and operational maturity continue to give it an advantage in cutting-edge AI development, while custom chips are deployed in more narrowly optimized scenarios.