NVIDIA Rubin Brings 5x Inference Gains for Video and Large Context AI, Not Everyday Workloads

NVIDIA’s Rubin GPUs are expected to deliver a substantial increase in inference performance in 2026. The company claims up to 5 times the inference performance of B200 and B300 systems, which would mark a major step forward in raw inference capability.

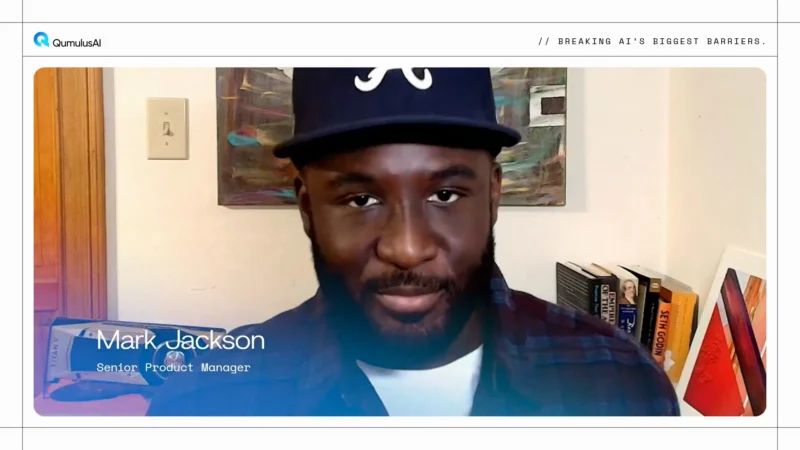

Mark Jackson, Senior Product Manager at QumulusAI, explains that this level of performance is not necessary for most inference workloads. He notes that standard clustered HGX or DGX systems handle typical jobs effectively. Rack-scale solutions with unified system memory, he adds, offer clear advantages for large-context workloads and video generation: they unlock higher throughput and capabilities that are not possible in more traditional clustered setups.