Soundbites – Applied Digital

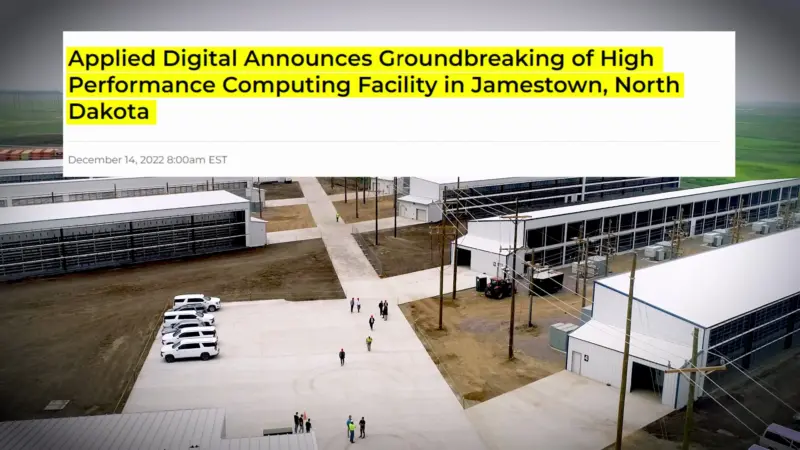

This video follows Applied Digital's major accomplishments from its IPO in April 2022 through October 2023. Highlights include:

- Partnerships with NVIDIA, Supermicro, and Hewlett Packard
- The opening of new facilities in North Dakota
- Gaining and onboarding new cloud clients
- The launch of a cloud AI service

Latest

Who is Applied Digital?

Applied Digital is a U.S.-based provider of next-generation digital infrastructure, redefining how digital leaders scale high-performance compute (HPC). With dedicated and experienced leadership in power procurement, engineering, and construction, Applied Digital collaborates with local utilities to solve the problem of congestion on the power grid while stimulating the development of more…

Wes Cummins on Applied Digital’s Promising Horizon

Wes Cummins, CEO of Applied Digital, shares his enthusiasm for the company's future, emphasizing the development of a robust HPC strategy. He points to the first facility, now operational in Jamestown, and to plans for further expansion in Ellendale and other yet-to-be-announced locations as grounds for his confidence that the company's future achievements will eclipse its impressive past.